Page Not Found

Page not found. Your pixels are in another canvas.

A list of all the posts and pages found on the site. For you robots out there, an XML version is available for digesting as well.

About me

This is a page not in the main menu

Published:

Control systems have long relied on math, not language. But what if large language models could sit in the control loop — interpreting signals, reasoning about intent, and tuning behavior in real time? This hybrid paradigm — learning-in-the-loop — could merge symbolic reasoning with continuous control, enabling robots that talk, learn, and act coherently.

Published:

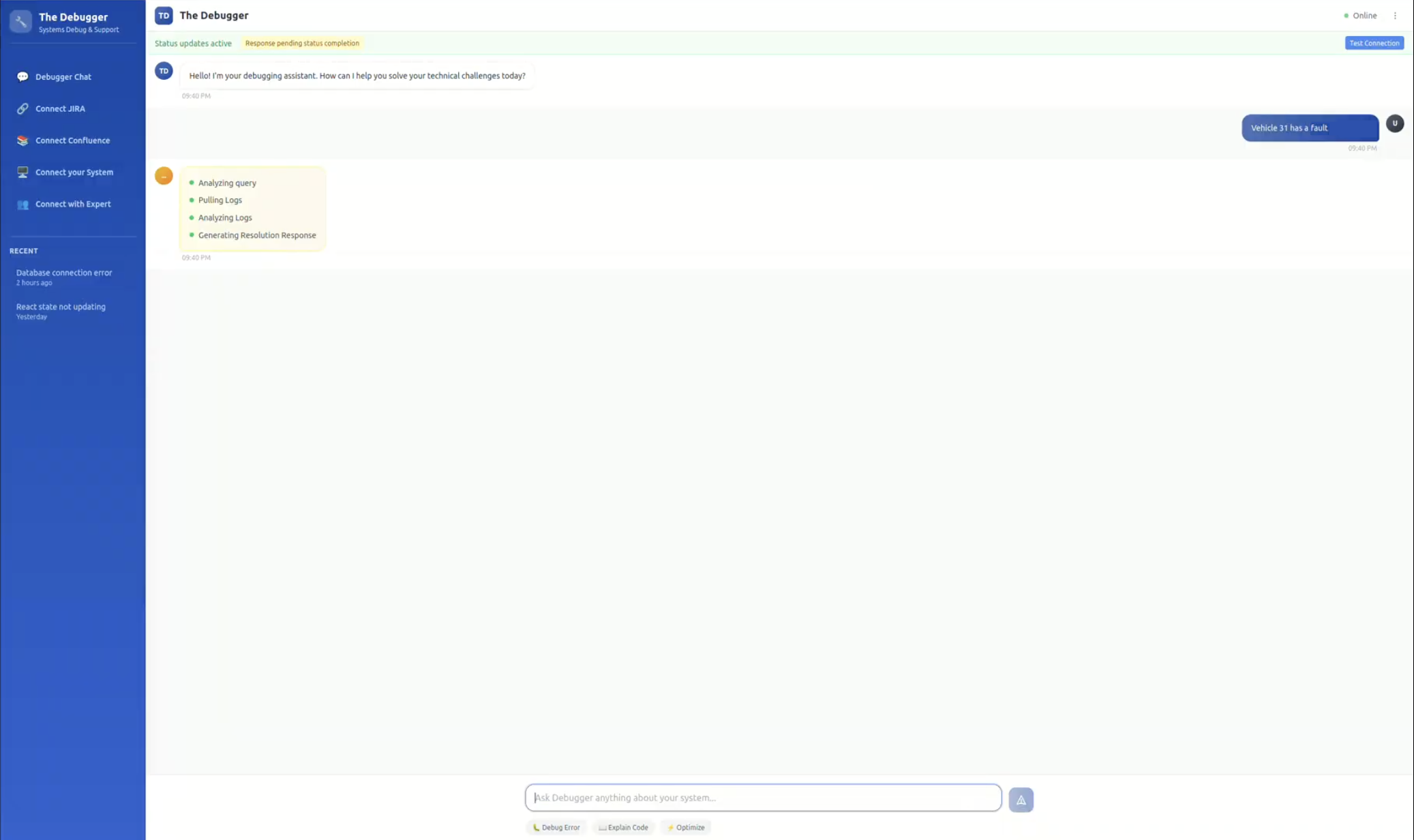

In most factories, debugging a failure involves staring at logs, cross-checking sensor data, and guessing where things went wrong. My multi-stage debugging agent emulates this process. It first locates the “error tick” in the logs, analyzes correlated sensor data, builds hypotheses, and refines them with context from prior errors. It doesn’t just answer “what went wrong,” but also why — by connecting data, context, and code knowledge.
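The first two stages described above (locating the error tick, then pulling the sensor data around it) can be sketched roughly as follows. This is a minimal illustration, not the agent's actual code: the `LogEntry` fields, the function names, the window sizes, and the sample data are all hypothetical, and the hypothesis-building stages are left out.

```python
from dataclasses import dataclass


@dataclass
class LogEntry:
    tick: int      # hypothetical monotonically increasing timestamp
    level: str     # e.g. "INFO", "WARN", "ERROR"
    message: str


def find_error_tick(logs):
    """Return the tick of the earliest ERROR entry, or None if there is none."""
    error_ticks = [entry.tick for entry in logs if entry.level == "ERROR"]
    return min(error_ticks) if error_ticks else None


def sensor_window(readings, error_tick, before=2, after=1):
    """Select readings whose tick lies in [error_tick - before, error_tick + after]."""
    return {
        tick: value
        for tick, value in readings.items()
        if error_tick - before <= tick <= error_tick + after
    }


# Made-up sample data, for illustration only.
logs = [
    LogEntry(1, "INFO", "conveyor started"),
    LogEntry(4, "WARN", "motor current rising"),
    LogEntry(5, "ERROR", "gripper stalled"),
    LogEntry(6, "ERROR", "task aborted"),
]
motor_current = {1: 0.8, 2: 0.9, 3: 1.1, 4: 2.4, 5: 3.9, 6: 0.0, 7: 0.0}

error_tick = find_error_tick(logs)
window = sensor_window(motor_current, error_tick)
print(error_tick, window)
```

Anchoring on the earliest error rather than the last one is a deliberate choice in this sketch: later errors are often consequences of the first, so the readings just before the first error are the more likely place to find a cause.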

Published:

In robotics and automation, downtime is deadly. I’ve been working on a system called The Debugger — an AI-driven troubleshooting agent that understands logs, analyzes system behavior, and even hypothesizes root causes like an engineer would. It integrates with real robots via Raspberry Pi hardware, pulling logs over MQTT and using an LLM-based reasoning layer to identify issues. Imagine a robotic technician that reads your logs, diagnoses faults, and guides you through recovery — in seconds, not hours.

Published:

I’ve been conceptualizing GRAL (General Robotic API Layer), a high-level interface that lets robots understand and execute natural-language commands, and that also gives them "brain support" to understand and reason about their environment. Instead of diving into ROS2 nodes and topics, you could simply say: “Navigate to the conveyor belt and pick up the red box.” GRAL decomposes this into primitive skills like navigation, picking, and placing, generates the required code, and executes it. The goal: bridge the gap between human intent and robotic action, seamlessly. GRAL can also try to understand the environment by taking sensory inputs and supplying them as rich contextual information to other nodes and algorithms in the robotics stack. For example, if there is a crowd on the robot’s shortest path and none on a slightly longer one, GRAL can help the robot recognize this by adding extra cost to the crowded path, so the planner takes the longer route instead.
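The crowd example can be made concrete with a small cost-based planner. The sketch below is only an illustration under stated assumptions: it uses plain Dijkstra on a hand-written graph, and the node names, edge costs, and the `crowd_penalty` value are hypothetical. In a real stack the penalty would come from a perception or reasoning layer and feed into the planner's cost map, which is not modeled here.

```python
import heapq


def shortest_path(graph, start, goal, penalties=None):
    """Dijkstra over graph[node] = {neighbor: base_cost}.

    penalties maps (node, neighbor) edges to an extra cost, e.g. for a crowd.
    Returns (total_cost, path), or (inf, []) if the goal is unreachable.
    """
    penalties = penalties or {}
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, base_cost in graph[node].items():
            if neighbor not in visited:
                extra = penalties.get((node, neighbor), 0.0)
                heapq.heappush(
                    queue, (cost + base_cost + extra, neighbor, path + [neighbor])
                )
    return float("inf"), []


# Hypothetical map: the aisle is shorter, the detour is longer.
graph = {
    "start": {"aisle": 2.0, "detour": 3.0},
    "aisle": {"conveyor": 2.0},
    "detour": {"conveyor": 3.0},
    "conveyor": {},
}

plain = shortest_path(graph, "start", "conveyor")

# Assumed output of a context layer: a crowd was detected in the aisle.
crowd_penalty = {("start", "aisle"): 5.0}
crowded = shortest_path(graph, "start", "conveyor", crowd_penalty)

print(plain)
print(crowded)
```

Without the penalty the planner picks the aisle; with it, the aisle's effective cost exceeds the detour's, so the longer but uncrowded route wins. The planner itself is unchanged, and only the costs it sees are adjusted.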

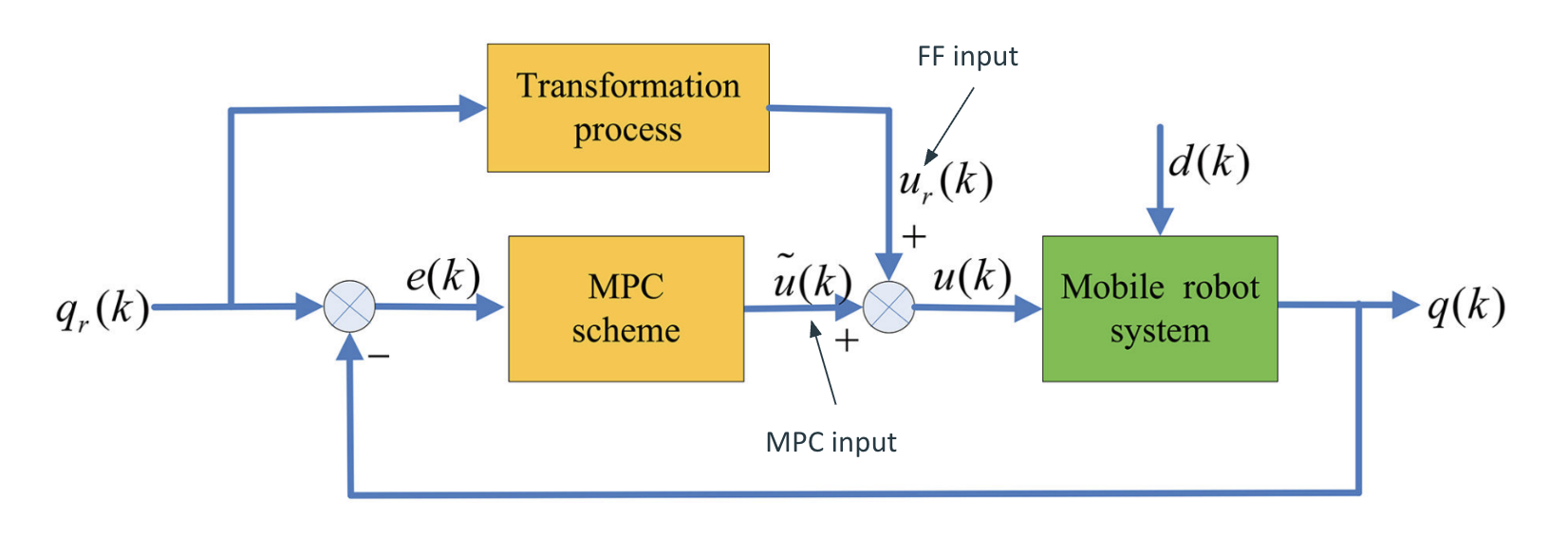

Differential Drive, MPC, Feedforward Control

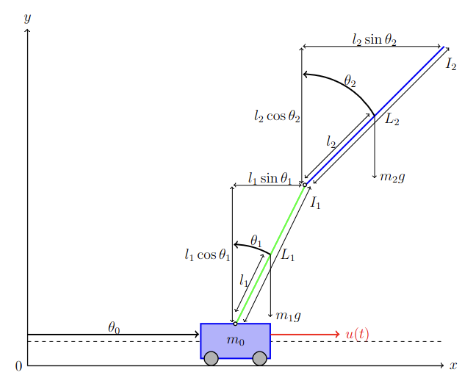

LQR, Pendulum, Dynamics

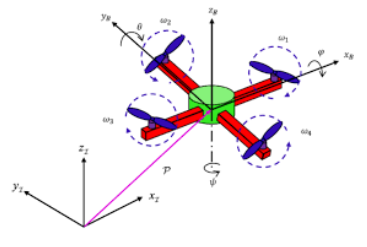

Explore my work in Model Predictive Control: guiding a drone with MPC-based trajectory planning and obstacle avoidance

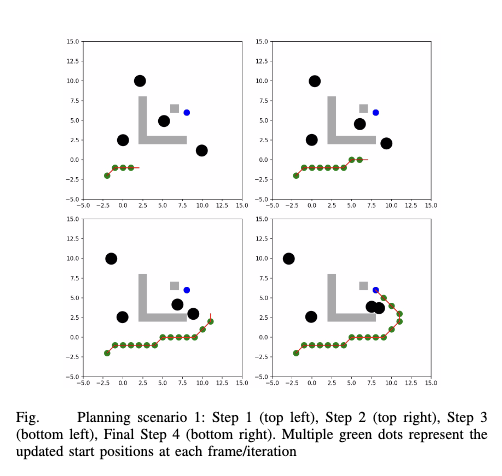

A*, Dynamic Obstacle Avoidance

RAG, LLM, Vector Database, Agent, Tool Use, Systems Connection

HJR, Path Planning

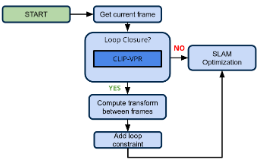

Using a language-based model for loop closure detection

PyTorch, MNIST, CNN

SLAM, Sensor Fusion, Particle Filter, PID

Forward and Inverse Kinematics, Path Planning, Computer Vision, Object Detection

ROS, Computer Vision, PID, Tag Detection

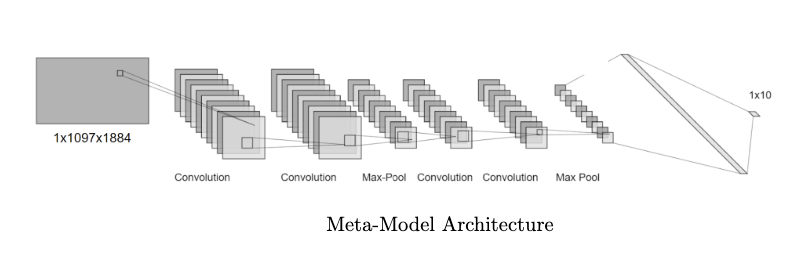

Raspberry Pi Camera, Supervised Learning, Depth

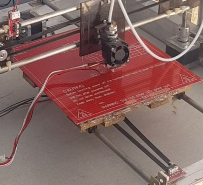

Design, Hardware Interfacing, G-code Generation, Marlin Firmware, Slicing

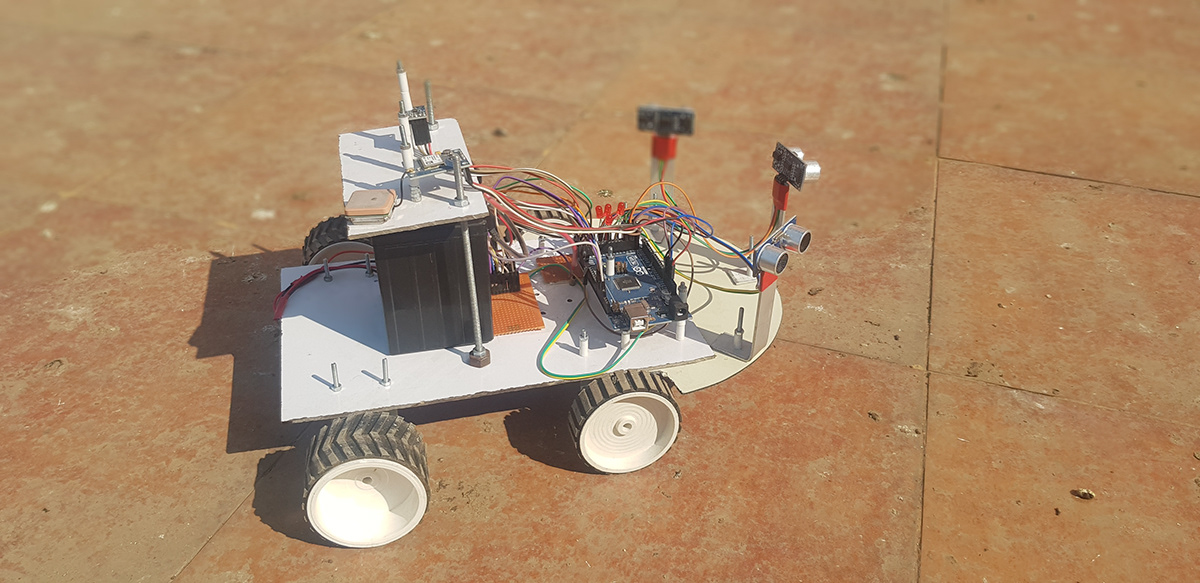

Arduino Mega, GPS Sensor, IMU, Ultrasonic Sensor, Motor Driver

Published in Journal 1, 2009

This paper is about the number 1. The number 2 is left for future work.

Recommended citation: Your Name, You. (2009). "Paper Title Number 1." Journal 1. 1(1). http://academicpages.github.io/files/paper1.pdf

Published in Journal 1, 2010

This paper is about the number 2. The number 3 is left for future work.

Recommended citation: Your Name, You. (2010). "Paper Title Number 2." Journal 1. 1(2). http://academicpages.github.io/files/paper2.pdf

Published in Journal 1, 2015

This paper is about the number 3. The number 4 is left for future work.

Recommended citation: Your Name, You. (2015). "Paper Title Number 3." Journal 1. 1(3). http://academicpages.github.io/files/paper3.pdf

Published:

This is a description of your talk, which is a markdown file that can be all markdown-ified like any other post. Yay markdown!

Published:

This is a description of your conference proceedings talk. Note the different field in type. You can put anything in this field.

Graduate course, University of Michigan, Robotics Department, 2023